Report

I finished in the top 65% of the private leaderboard, which counts as the official score for this contest. Of 1,969 teams, my team (just me) ranked 1,261st with a score of 0.56330, using RMSLE (root mean squared logarithmic error) as the measure of accuracy.

On the public leaderboard, however, I ranked in the top 18% with an RMSLE of 0.45970.

This second score is a lot better because I was able to submit my model for scoring up to three times a day and refine it accordingly. At some point I was ranked in the top 11%, but this was short-lived, lasting no more than a week or so before some statistics giants woke up and ate my lunch.
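For reference, the RMSLE behind both leaderboard scores can be sketched in a few lines. This is a minimal illustration in plain Python rather than the R code I actually used, and the function name `rmsle` is just a label chosen for this sketch.

```python
import math

def rmsle(predicted, actual):
    """Root mean squared logarithmic error of two equal-length sequences."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if not predicted:
        raise ValueError("need at least one value")
    # log1p(x) computes log(x + 1), so a demand of zero does not hit log(0).
    squared_log_errors = [
        (math.log1p(p) - math.log1p(a)) ** 2
        for p, a in zip(predicted, actual)
    ]
    return math.sqrt(sum(squared_log_errors) / len(squared_log_errors))
```

Because the errors are taken on a log scale, being off by one unit on a small order costs more than being off by one unit on a large order.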

Overview

The goal of this competition was to predict future inventory demand based on historical sales data. To do this, all participants were given 9 weeks of sales data. For 7 of these weeks, adjusted demand is provided, derived from the inventory delivered as units sold minus units returned. The other two weeks, however, do not include units returned, so adjusted demand for those weeks is the target to predict. The difference between this prediction and the units actually delivered would translate into both saved inventory and saved money.
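The arithmetic described above is simple enough to sketch. The function and parameter names below are hypothetical, and flooring demand at zero is an assumption of this sketch, not something stated in the competition description quoted here.

```python
def adjusted_demand(units_sold, units_returned):
    """Adjusted demand as units sold minus units returned."""
    # Assumption: demand cannot be negative, so floor the result at zero.
    return max(units_sold - units_returned, 0)

def saved_units(units_delivered, demand):
    """Units that would not have needed delivering if demand had been known."""
    return units_delivered - demand
```

For example, a client who bought 10 units and returned 3 has an adjusted demand of 7, so delivering 10 units means 3 units could have been saved.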

Model scoring for this competition was done against two distinct test sets, one public and one private. Each team was allowed to submit 3 models per day, which were evaluated and ranked against the public dataset. Before the end of the competition, each team was allowed to select two of their submissions to be scored and ranked against the private dataset instead. The best three submissions would receive prizes.

Week 8 Predictions

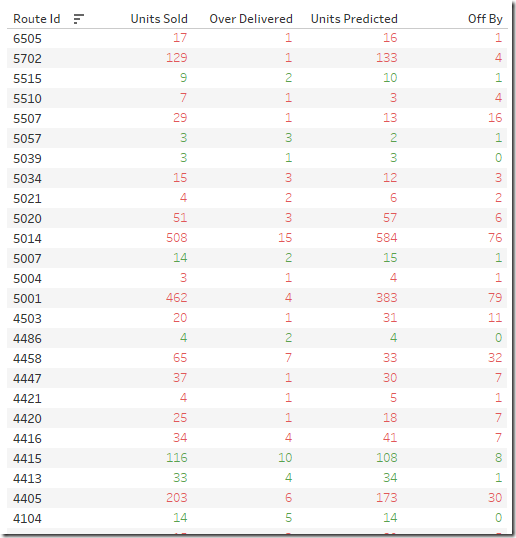

After the competition ended, I applied my model to predict adjusted demand for week 8. This allowed me to compare its performance against the actual deliveries made. Red highlights events where my prediction was worse than the actual deliveries made, and green highlights events where my model was an improvement over them.

The first two metrics used here are simply the units delivered and how far off they were; the last two are my predicted value and how far off it was. Whenever my prediction is closer to actual demand, my model wins.
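The comparison rule above can be sketched as follows. The names are hypothetical, and treating an exact tie as its own case is an assumption of this sketch, since the figures only use red and green.

```python
def compare_row(actual_demand, units_delivered, units_predicted):
    """Return both 'off by' values and the highlight colour for one row."""
    delivered_off_by = abs(units_delivered - actual_demand)
    predicted_off_by = abs(units_predicted - actual_demand)
    if predicted_off_by < delivered_off_by:
        verdict = "green"  # the model was closer to actual demand
    elif predicted_off_by > delivered_off_by:
        verdict = "red"    # the actual delivery was closer
    else:
        verdict = "tie"    # assumption: ties are reported separately
    return delivered_off_by, predicted_off_by, verdict
```

With an actual demand of 10, a delivery of 14 and a prediction of 11, the delivery is off by 4 and the prediction by 1, so the row is green.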

First off, I compare my model’s performance for clients.

Figure One – Units Sold and Over Delivered (returned) versus Units Predicted. ‘Off by’ is the count the prediction is off from actual demand.

Figure Two – Money for Units Sold and Over Delivered (returned) versus Money for Units Predicted. ‘Off by’ is the amount the prediction is off from actual demand.

In general, each route serves one or, at most, a few customers. I am assuming that one route also means one driver. This driver is the individual who estimated the number of units to deliver per product to each customer. Because of this, I decided to look at the routes as well.

Figure Three – Units Sold and Over Delivered (returned) versus Units Predicted. ‘Off by’ is the count the prediction is off from actual demand.

It is clear that Grupo Bimbo’s actual delivery estimation is not bad by any means. Note how the higher the sales are, the better their predictions are! In contrast, my model is just a bit better at predicting when unit sales are smaller.

Figure Four – Money ($ Mexican Pesos) for Units Sold and Over Delivered (returned) versus Money for Units Predicted. ‘Off by’ is the amount the prediction is off from actual demand.

If anything, the current method Bimbo Group has (amounts determined by route drivers) is not inaccurate by any measure. I would like to see this same overview provided by top-scoring Kagglers to compare their models’ performance.

Retrospective

Going into this competition, I spent a lot of time marveling at the Kaggle experience, from scouring the forums full of helpful peers to studying previous competitions and the insight and accuracy some teams were able to bring to the table and freely share. I also focused, perhaps too much, on improving my score. Unless I was a contender for the prize money, this mattered little in the end.

Lesson – My time would have been better spent reading more and learning about the different ways others were solving this problem.

Another thing I obsessed about was the time it took to complete a training scenario under different parameter sets. This proved to be a big distraction into hardware and the like, and it could also have become very expensive. Once I had decided which parameters and features to use and examine, modeling took about 25 minutes per experiment. The better and more efficient my model, the fewer hardware resources it consumed. Also, although longer training times looked promising, the better models were often simpler and trained faster. Bad models, on the other hand, would just crash my R session after the 45-minute mark or so.

Lesson – Hardware does not make you better. It just allows bigger errors and distracts you from the problem at hand.

I should have trusted my cross-validation script results more. I ran several iterations with different features but discarded the findings because they did not perform as well against the public dataset. Instead, I focused all my time on improving my one ‘score’ against the public dataset and submitted my two best-scoring models for it. This effectively wasted one of my two shots.

Lesson – I should have submitted one model with my best score against the test set and another model based on my cross validation script.

Another big unknown to me was how to use the information provided in the dataset. Using ALL the information would require lots of hardware resources; the complete dataset has more than 70 million records. It is a lot more reasonable to use only the data points that matter, but determining the right set is a rather open-ended exercise.

Lesson – Given the problem and the data provided, spend as much time as possible determining trends between the complete set (the train dataset) and the incomplete set (the test set). Once the similarities between these are established, use the extra information included in the train set to better model the problem in the test set.
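One simple way to start looking for similarities between the two sets is to check how many of the keys in the test set also appear in the train set. The sketch below assumes each record can be reduced to a hashable key such as a (client, product) pair; that key choice and the function name are illustrative assumptions, not something I did during the competition.

```python
def key_coverage(train_keys, test_keys):
    """Fraction of distinct test keys that also appear in the train set."""
    train_set = set(train_keys)
    test_set = set(test_keys)
    if not test_set:
        raise ValueError("test_keys must not be empty")
    return len(test_set & train_set) / len(test_set)

# Hypothetical (client_id, product_id) pairs for illustration only.
train_pairs = [(1, "bread"), (2, "rolls"), (2, "bread")]
test_pairs = [(1, "bread"), (3, "rolls")]
coverage = key_coverage(train_pairs, test_pairs)
```

A low coverage would suggest that history for a given pair is often missing in the test weeks, so features built only on those pairs would not carry over.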

Lastly, I only measured my model’s performance against the public set provided by Kaggle and relied on just submitting it for scoring against their service. I should have spent more time testing the model myself using the extensive train set provided. This would have let me better focus the solution on the problem at hand (instead of focusing so much on the public set).

Lesson – Testing on the given dataset would have lessened my reliance on the public set, which was only part of the story.
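A minimal sketch of what testing on the given dataset could look like: hold out the most recent week that has known demand, train on the earlier weeks, and score the predictions locally. The row layout (dicts with `week` and `demand` keys) and all function names are assumptions made for this illustration.

```python
def split_by_week(rows, holdout_week):
    """Train on weeks before holdout_week, validate on holdout_week itself."""
    train = [r for r in rows if r["week"] < holdout_week]
    valid = [r for r in rows if r["week"] == holdout_week]
    return train, valid

def evaluate(fit, rows, holdout_week, score):
    """Fit on the earlier weeks and score predictions for the held-out week."""
    train, valid = split_by_week(rows, holdout_week)
    predict = fit(train)  # fit returns a function mapping a row to a prediction
    predictions = [predict(r) for r in valid]
    actuals = [r["demand"] for r in valid]
    return score(predictions, actuals)

def fit_mean(train):
    """Toy baseline: always predict the mean demand seen in training."""
    mean = sum(r["demand"] for r in train) / len(train)
    return lambda row: mean

def mean_abs_error(predictions, actuals):
    return sum(abs(p - a) for p, a in zip(predictions, actuals)) / len(actuals)

rows = [
    {"week": 7, "demand": 2},
    {"week": 7, "demand": 4},
    {"week": 8, "demand": 5},
]
local_score = evaluate(fit_mean, rows, holdout_week=8, score=mean_abs_error)
```

Swapping the toy baseline for a real model and the scoring function for the competition metric gives a local score that does not cost a daily submission.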

Next Steps

Enroll in an R programming course or work through a soup-to-nuts R programming book. Maybe use it for a project at work…

Tech Details

All work was done in a VM Server with an AMD A8-5600K quad core processor overclocked to 4.3 GHz and 32 GB memory. A Linux instance was given all available resources and ran RStudio mostly by itself. Some analysis was done in an Oracle DB as well because I am a lot more comfortable in a db server than in R. I am working on this gap.

Modeling would tax the processor at 100% utilization and consume 24 GB of memory. The larger and less optimized models would take longer and consume more resources, as expected (and often crash my R session). My best models would peg the CPU for about 20 minutes and consume a bit less than 20 GB of memory.

Overheating was a problem initially. This was partly due to dust in the case, but heat had never been an issue for this computer before. Installing an aftermarket CPU cooler resolved this.

Here is a before and after picture set. The first picture shows the original heatsink minus the fan and the second picture shows the new heatsink and fan combo.

This change also allowed me to overclock the system from 3.6 GHz to its maximum allowable of 4.3 GHz. It has run cool ever since without crashes or stability issues. It’s like a poor man’s CPU upgrade, neat!

I made 28 submissions over the span of the competition and spent countless nights on these (a side project for half a summer). Except for the first one, all of these submissions were made using Extreme Gradient Boosting (XGBoost) as the learning algorithm. The first one, which had a dismal score, was made using Random Forest instead.

Lastly, I’ve posted all my code over at GitHub. This is my best submission.

Why Did I Do This?

I was reading Data Smart for fun. I had previously watched a YouTube series, Intro to Data Science with R, which goes over the Titanic dataset with Random Forest, and had read a very insightful series on this dataset, Titanic: Getting Started with R. Finally, at the beginning of summer, I got an email from Kaggle inviting me to join their upcoming competition for Bimbo Group.